Over the years, I’ve noticed that a lot of people got into radio because they thought they had “good ears.”

That means they know a hit the first time they hear a song, which is truly something of a gift. But as with picking stocks or betting on the ponies, few people really have this intuitive skill. Still, many of us think – or in a lot of cases, thought – it’s a talent we have.

One of the reasons I first immersed myself in research was that I thought of it as something of a “gut adjustor.” Back at MSU, the only required course in my master’s degree program was TC 831 – Research Methods. Dr. John Abel was the gifted prof who taught this class. I came away thinking that if I was ever going to become a radio programmer, a background in audience research would be helpful. The class gave us the tools to evaluate methodologies and data collection, as well as to apply research findings to fundamental questions.

This training has served me well throughout my career, whether I’ve been interpreting rating books, perceptual research, music tests, sales support materials, or anything else that provides data on radio and media usage.

I’ve gotten pretty good at looking at a report and getting a sense of a study’s efficacy. And oftentimes, that starts with methodology: who was interviewed, and how and when the study was conducted. All of these variables play a role in determining the credibility and usefulness of research.

In most cases, the more research you see, the smarter you get – especially if you know your way around the numbers. But early on in one’s career, seeing research refute long-held beliefs can be jarring, disturbing, and yes, even heartbreaking.

When you learn the locals aren’t all that aware of a station or that they avidly dislike a song you love, or they don’t live to play your contests, it can be downright eye-opening and sobering. After a while, smart broadcasters begin to anticipate precisely what a study will tell them.

But there are always surprises. And that’s why you conduct the research to begin with. Better to get a little shocked in a conference room before the spring book, than to take a beating on the day the numbers are released.

That’s why I laugh (to myself, of course) when someone who’s sat through three hours of a data dump declares: “We really didn’t learn much. I already knew most of that.”

Of course, that’s not true. Even if you see research annually for a brand, you still end up learning new things – especially if you keep your eyes open. Because while the data almost always shows you what has happened in the past, the smart, strategic minds in the room can connect the dots and come up with some pretty savvy action steps.

Like any other art, interpreting research takes time, along with experience in varied situations. See enough of these, and the pieces usually fall into place. And the research stops breaking your heart. You can begin to predict certain outcomes. And when you’re surprised, you learn to stop taking it personally.

Perceptive programmers learn that research isn’t a referendum on their skills and talent. Instead, it’s a one-shot evaluation – like a photo of a moment in time. You see the data, you take a step back, you regroup, and you begin to create a plan that puts the research to work. In some cases, you weaponize it because you may be the only ones in your market with the ability to understand current conditions.

Still, it can be heartbreaking to learn that a survey of 400 target listeners doesn’t share your musical taste, doesn’t know who the midday jock is, and doesn’t think your station “has gotten better lately.”

These days, more research is being conducted by more and more firms, organizations, universities, and other groups. So it becomes even more important to scrutinize all the factors: the moving parts that contribute to a study’s validity, as well as the things that erode it or make it questionable.

JacoBLOG readers know we’ve been talking a lot about the changing nature of buying (or leasing) a new car. One of the hot topics is “microtransactions.” This is where automakers hope to market subscription services to car buyers. Things like heated seats, premium sound systems, and a host of other features. At CES, the Consumer Technology Association’s VP/Research Steve Koenig speculated that “radio” could one day be offered as a subscription, referred to as FaaS – Features as a Service.

As you might imagine, the auto industry loves the idea of recurring revenue. So the question is, how do consumers feel about this idea – paying a monthly fee for a heated steering wheel or Bluetooth connectivity?

So when two conflicting reports landed in my inbox at the same time, they got my attention. Once again: who did the research, who did they talk to, when did they survey their sample, and how did they ask the questions?

The first study trumpeted this two-part car-ordering model: first, you negotiate the price and your monthly payments; second, you check off all the additional features you’re willing to pay for monthly.

While various automakers are going about this differently, these FaaS – or Features as a Service – can carry a monthly subscription fee for heated seats, a premium sound system, streaming video, and other features yet to come. Some of the experts we’ve talked to believe that AM/FM radio could one day be considered a FaaS.

But how do consumers feel about this new model?

It depends on which research you read and who publishes it. Consider these two headlines, both arriving in my inbox on the same day:

In some ways, this is worse than flipping back and forth between Fox News and MSNBC during the State of the Union Address. Unlike political pundits blathering and opining over what they just saw, we’re looking at two researchers with some credibility: McKinsey on the pro side of the ledger versus Auto Pacific on the con side.

Now, you’d think one major factor would be who they talked to. The McKinsey study surveyed Europeans (in France, Germany, and the UK), while Auto Pacific’s research was conducted among Americans. But geography had less to do with the differences – or similarities – between the two studies.

If you read a bit between the lines, the Auto Pacific study, as reported in Ars Technica, isn’t altogether negative. It points out that this new model appeals more to prospective EV and PHEV (plug-in hybrid) owners, as well as to younger consumers.

Still, what do we make of two conflicting stories on the same topic?

A little digging shows the two surveys established very similar findings – the devil was in the interpretation. It’s not that either research study is necessarily flawed; it’s how analysts read the data.

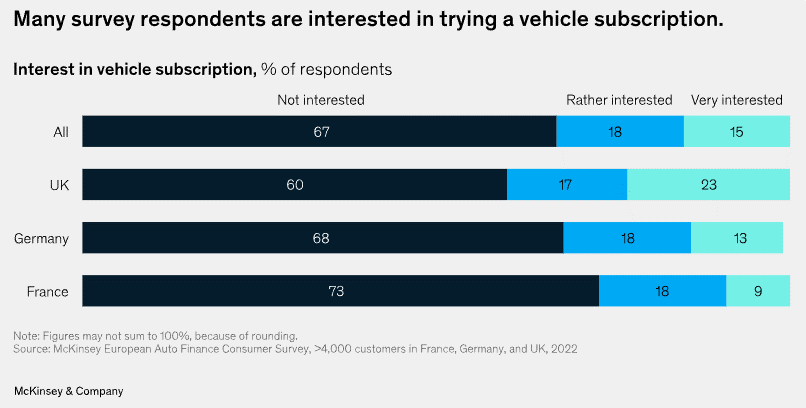

On the pro side, McKinsey took the interest shown by roughly one-third of its respondents as a sign that the model has “gain(ed) importance.” It also notes that younger consumers show higher interest.

A look at the chart brings us back to that famous glass of water: half full or half empty? In Germany and France, roughly two-thirds aren’t interested in these monthly features. But those who were “very” or “rather interested” caught McKinsey’s attention, and thus, the positive spin.

Note that car buyers in the UK are the most enthusiastic. Nearly one in four (23%) say they’re “very interested” in vehicle subscriptions. Obviously, Brexit took its toll.

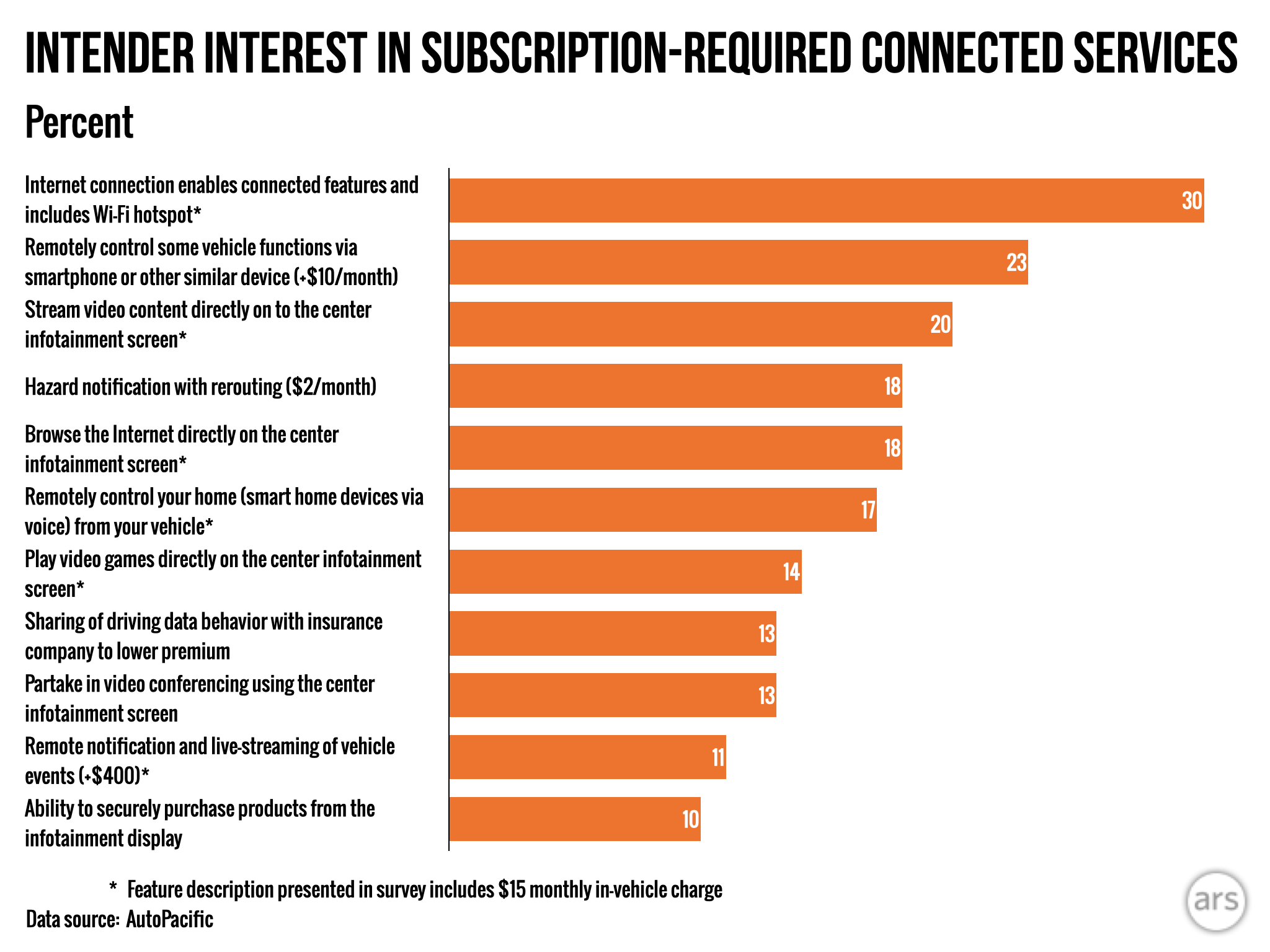

Over in the USofA, Ars Technica flips the numbers, noting that “only 30% of people looking to buy a new car said they were interested in paying for their car’s Internet access,” the most desirable of the FaaS options tested.

So, similar findings, but wildly different conclusions. And that’s another reason why marketers, programmers, managers, and owners need to tread lightly when reviewing data.

The numbers can be turned (or manipulated) in myriad directions. And that’s why we all should proceed with a bit of caution.

If you’re left reading these two studies and wondering whether these extra subscription services stand a chance of catching on with consumers, I feel your pain.

Let me offer my interpretation, based on research we see – among core radio listeners in the U.S. and Canada. We’ve been asking about this idea of “peak subscription,” wondering whether consumers are tiring of those escalating monthly fees.

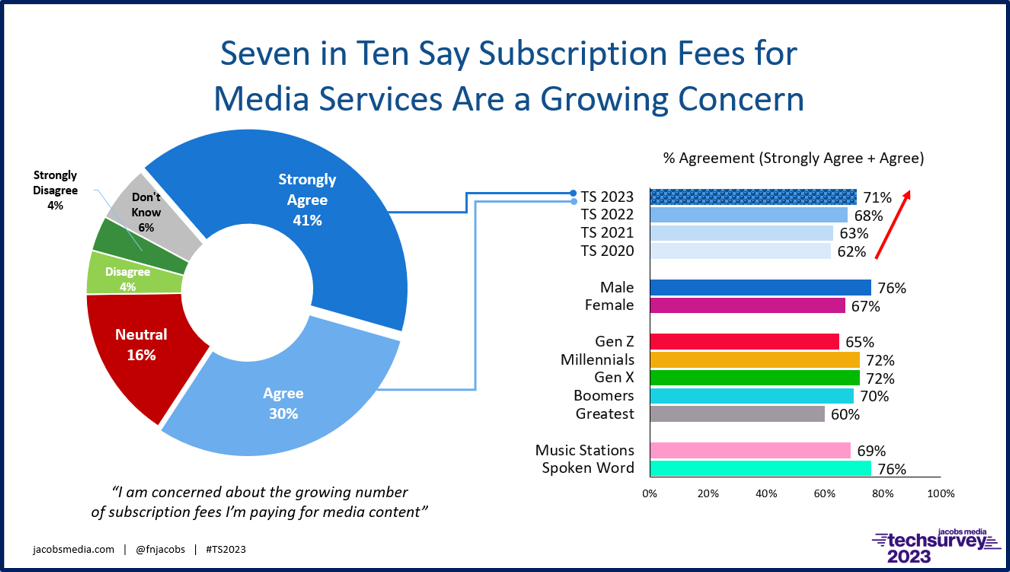

Here’s a trended chart from the soon-to-be-released Techsurvey 2023.

The four-year trend with my accompanying red arrow tells the story. This isn’t a one-time snapshot; it’s an annual look at the same question, asked the same way. And as you can see, the percentage of those expressing concern about the rising cost of subscription fees for media content continues to rise, hitting a new high of 71% in the new study. True, this isn’t about cars, car buyers, or FaaS, but it does revolve tightly around that feeling of being nickel-and-dimed to death by a growing number of businesses and organizations. Even charities and nonprofits should scrutinize this chart carefully, asking whether the monthly fee structure has begun to sour.

From a radio broadcasting standpoint, these findings shed some light on whether carmakers will, in fact, charge for radio’s inclusion in the dashboards of the future, and get away with it. Of course, several are eliminating AM radio from their vehicles altogether rather than offering it even as a FaaS.

But in the final analysis, as subscription fees mount, the silver lining for broadcast radio may come down to one of its superpowers, something we see test through the roof in Techsurvey each year:

It’s free.

You can’t argue with those findings.

The other lesson here, of course, is to use your head when looking at data. Do you already possess knowledge of and experience with the situation that even the research analyst doesn’t have? How can that inform the data and help the key players around the conference room table better understand what’s really going on?


Looking over the horizon: Fred’s point about data being a snapshot of a moment in time is important. Like a blood pressure reading, even the most wide-ranging report of vital signs represents the now. Jim Collins (Good to Great) adds another wrinkle: what’s the bottom line? Radio, including transmitters and streamers, is a business ecosystem. It has an aggregate cost per hour, day, week, and year that is hopefully offset by enough revenue to provide enticing margins to potential investors. What Jim calls our economic denominator is the bellwether. It’s what’s behind the massive layoffs everywhere from Amazon to SiriusXM.

As we’ve seen with the rise of tech, unexpected disruptions (like Covid) can be game changers, messing up the extrapolations of the best minds.

That’s why I celebrate visionaries with the courage to imagine what’s over the horizon. I had a gifted boss who challenged us to envision the capabilities we needed to compete ten years from now, and to begin investing in research and development to act now as the company we hoped to become.

That attitude can be problematic in an atmosphere where year-over-year quarterly earnings rule and experiments are among the first to feel the accountant’s scythe. But it’s essential.

What if we lose our spot in the automotive center stack? What if we lose our compulsory license and have to start paying for music per spin? What if Spotify gets its AI DJ act together?

Imagining what success looks like through that prism, combined with the valuable snapshots research provides can be a beam of light in the darkness of the unknown.

Thanks for this thoughtful comment, Scott. I was lucky to be around radio pros who taught me how to think and ask questions. It sort of reminds me of Professor Kingsfield and the “Socratic Method.” But that’s how you question the data and use it, as you say, to look over the horizon. As we’ve learned in the past couple of decades, nothing stays as it was. Appreciate it.

Great points, Fred! Like you, I have spent my career as a researcher and programmer, and I am often stunned by a programmer’s reactions and expectations during a research project. I remember one instance, while conducting a music test followed by a focus group, when the PD came up to me and said, “These are clearly not my listeners!! Where did they come from? They don’t look or talk like my listeners!” Before seeing any results, this person knew exactly what the listeners should look like, sound like, and dress like. The fact is, the results greatly favored the station. I wasn’t upset or angry that my own integrity, or my company’s, was questioned. In fact, it made me wonder: was that even possible? Were they actually the sample we built? So we dug in even deeper to satisfy any concerns. The respondents did indeed represent the proper sample. With every research study we conducted, someone would often cast an expected measure of doubt during the results presentation. “These aren’t my listeners!” “That is not possible!” Most often, though, the wisest and most experienced clients sat and listened thoughtfully, and said something like, “Wow! I didn’t know that. Looks like we have work to do.” I remember one brilliant PD you and I both know, whom I miss terribly, who would always turn to the methodology and sample pages first. After one presentation, I asked him what he thought. He said, “Well, this is why we do the research. To find out that what we think we already know is not always aligned with reality and what we really need to know.”

For me, a trip to the license bureau is always a learning experience. Take a look around the room. It just might be the best random sample of media consumers you’ll find.

Truly a slice of real life – people in dire need of a little entertainment.

I appreciate the perspective, and I agree with your assessment of that brilliant PD. He made research studies interesting. He pushed, he questioned, he tested, and he also had fun. And by the time the process was over, we all had confidence we put ourselves and the audience through the paces. Thanks, Bob, and for reminding me of “These aren’t our listeners.”

Wow, you must be reading my mind. I just had a conversation with someone at a station who expressed concern that their colleagues are making major strategic decisions JUST from looking at the tech survey, as if that’s the only piece of research they have. I thought, “Really? Are we not training programmers to think critically, to look at ALL of the indicators on their dashboard to make decisions, not just one?” I hear inexperienced programmers make all kinds of wild assumptions when they look at their data, trying to understand what is driving their audience’s behavior without considering their own programming decisions. “Must be news fatigue, let’s throw some music programming in there,” instead of looking at a number of factors, including their own programming choices, that could be repelling listeners. DO THE HOMEWORK!! Look at all the data you can get your hands on and then do some original research. You’ll never know the answer to the question of audience motivation unless you ask.

In some ways, Abby, it’s a lost art. And as I learned early on, you don’t need to be a research expert. You need to learn how to ask the right questions. Thanks for this.

Your first research project is like Neo taking the red pill in The Matrix.

Actually, it’s like taking both of them!

Haha!

4 out of 5 dentists surveyed recommend sugarless gum for their patients who chew gum.

That’s 80%!

Guess I better use sugarless gum.

Sent with humor.

And better yet, start flossing.