Celebrity impressions have been a staple of radio morning shows for decades. When a morning show is fortunate enough to find somebody who can do accurate and entertaining impersonations, it can be a gold mine. But with the new advancements in artificial intelligence (AI), it’s now possible for anybody to impersonate a celebrity.

I’ve tried it using Eleven Labs’ Speech Synthesis, which is currently in Beta. As of this writing, you can create three custom voices for free, ten for $5 per month, and 30 for $22 per month.

Here’s how you do it:

1. Find Clean Audio of the Celebrity

First, you’ll need a clean audio sample that the AI can use to “learn” the celebrity’s voice. Eleven Labs requires at least sixty seconds of audio with as little background noise as possible.

I head to YouTube and search for an interview with that celebrity. Once I find one, I use ClipGrab to rip an MP3 audio file from the video. Alternatively, you could see if the celebrity has been a guest on any podcast episodes and grab the audio from there.

Example: Ailsa Chang

To give you an example, let’s use Ailsa Chang, a host of NPR’s All Things Considered. I know Ailsa from high school. While we didn’t go to the same school, we were in the same speech and debate league, so we would often see each other at tournaments.

We were both Lincoln Douglas debaters. Once, we even faced off in a debate round. She kicked my ass. (In my defense, she beat just about everybody. Ailsa was a very, very, very good debater.)

Still, I harbor a grudge. So as payback, we’ll use her voice for this exercise. A quick search on YouTube surfaced an interview that Ailsa did on the PBS NewsHour many years ago:

2. Edit Out Everything but the Celebrity’s Voice

Once you have an audio file, import it into your favorite editor and cut it down to 60 seconds with only the celebrity’s voice. If you’ve got enough material to work with, you can create multiple samples. Bounce them down to MP3 files.
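If you like to plan your cuts before you start slicing, the bookkeeping is simple arithmetic. You add up the stretches where only the celebrity is speaking until you reach a minute. Here’s a minimal Python sketch of that idea. It assumes you’ve written down start and end times (in seconds) while scrubbing through the interview in your editor. The function names and timestamps are made up for illustration, and the actual cutting and bouncing still happens in your audio editor.

```python
# A minimal sketch, assuming you've jotted down (start, end) timestamps in
# seconds for every stretch where only the celebrity is talking. The
# function names and the example timestamps are hypothetical.

def select_segments(segments, target=60.0):
    """Keep segments in order until their combined length reaches `target`
    seconds, trimming the last segment so the total doesn't overshoot.
    Returns the chosen (start, end) pairs and their total length."""
    chosen = []
    total = 0.0
    for start, end in segments:
        remaining = target - total
        if remaining <= 0:
            break  # we already have enough audio
        if end <= start:
            continue  # skip empty or reversed ranges
        length = min(end - start, remaining)
        chosen.append((start, start + length))
        total += length
    return chosen, total


def needs_more_audio(total, minimum=60.0):
    """True if the selected audio falls short of the minimum sample length."""
    return total < minimum


if __name__ == "__main__":
    # Hypothetical solo-speaking stretches noted while scrubbing an interview
    clips = [(12.0, 31.5), (45.0, 58.0), (70.0, 102.0)]
    chosen, total = select_segments(clips)
    print(chosen, total)
    if needs_more_audio(total):
        print("Not enough clean audio yet; find another interview.")
```

With those example timestamps, the last stretch gets trimmed to end at 97.5 seconds, so the three pieces add up to exactly 60 seconds. If the total comes up short, that’s your cue to go find another interview or podcast appearance.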

3. Have the AI “Learn” the Celebrity’s Voice

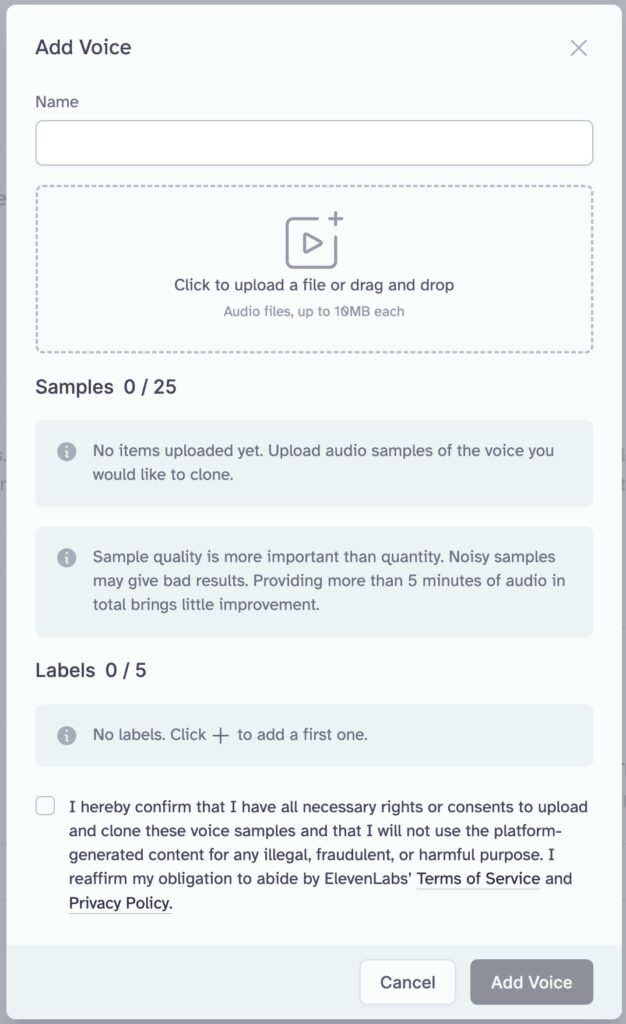

Create an account with Eleven Labs, log in, and click the “Add Voice” button. This box will open up:

Name your voice and upload your sample. You can upload multiple samples if you created them. If you want, you can apply a label to the voice.

If you check the box affirming that you have the rights to use this voice, you’re probably lying, and that’s on you, not me. We’ll discuss that more in a moment.

Click the “Add Voice” button.

4. Put Words in the Celebrity’s Mouth

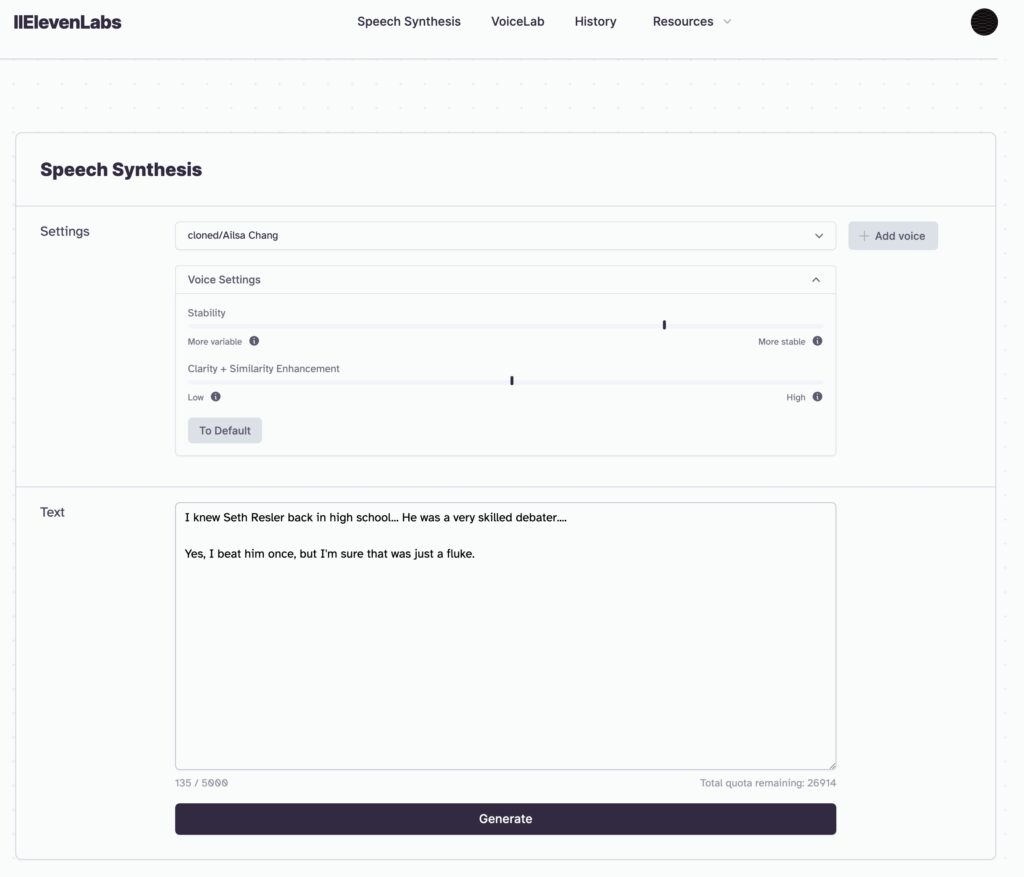

The voice will now appear in your VoiceLab dashboard. Click “Use” beneath the name of the voice and a new page titled “Speech Synthesis” will open up:

Here, you can adjust the voice settings and then type in the text that you want it to say. I have found that you need to play with it to get it right, sometimes adding ellipses when there should be a pause, or phonetically spelling out some words. You can listen to the audio output before you download it, and it may take you a few tries to get the results that you want.
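If you end up making the same tweaks over and over, you could keep them in a small lookup table. You would apply that table to your script before pasting it into the Speech Synthesis box. Below is a minimal Python sketch of that idea. The `[pause]` marker and the respelling table are my own hypothetical conventions, not Eleven Labs features. Whether a given respelling actually helps is something you’d still have to check by ear.

```python
import re

# Hypothetical respellings you might collect through trial and error.
# Here, station call letters are spaced out in the hope that the voice
# reads them as individual letters rather than as one word.
RESPELLINGS = {
    "WKRP": "W K R P",
}

# Hypothetical marker typed while drafting wherever you want a pause.
PAUSE_MARKER = "[pause]"


def prepare_script(text, respellings=RESPELLINGS, pause_marker=PAUSE_MARKER):
    """Swap pause markers for ellipses and apply phonetic respellings."""
    text = text.replace(pause_marker, "...")
    for word, phonetic in respellings.items():
        # \b anchors keep "WKRP" from matching inside a longer word
        pattern = r"\b" + re.escape(word) + r"\b"
        # A function replacement avoids backslash escapes in `phonetic`
        text = re.sub(pattern, lambda _m, p=phonetic: p, text)
    return text


if __name__ == "__main__":
    draft = "You can win tickets [pause] by listening to WKRP."
    print(prepare_script(draft))
```

Keeping the tweaks in one place means that when you find a spelling that works, every future script gets it automatically.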

In this case, I’m going to have Ailsa set the record straight about her debate win over me. Listen to the final result:

Fake Ailsa Chang

That’s it! Easy, right? Also: Scary, right?

Example: Tom Griswold of The Bob & Tom Show

Let’s try one more, this time with an example from the world of commercial radio. Tom Griswold hosts The Bob & Tom Show. There are plenty of videos from Tom’s nationally syndicated morning show on YouTube to choose from, but I went with this one:

It was a little harder to stitch together a clean audio sample because the show features a slew of co-hosts who keep each other laughing, but I was able to pull together a minute of just Tom’s voice and upload it to Eleven Labs.

I never debated Tom in high school, so I wasn’t sure what to make him say. In the end, I had him read a list of Ellie’s puns from episode 4 of HBO’s The Last of Us. Take a listen:

Fake Tom Griswold

How good is this impersonation? The real Tom Griswold was kind enough to read the exact same puns so you can compare the two:

The Real Tom Griswold

I can clearly hear differences in the audio, but I can’t tell whether that’s due to limitations in the AI software or simply differences in the microphone setups between the two recordings. So I did one final test. This time, I had the AI use the audio sample above to learn Tom’s voice, then I gave it a different script. Here’s the result:

Fake Tom Griswold #2

Well, whaddya think? Would it fool you?

How Radio Could Use AI Voices

Now that I’ve taught you how to do this, let’s talk about whether you actually should. I can immediately think of several scenarios in which a radio broadcaster might want to use this technology:

- A morning show wants to conduct a fake interview with the celebrity.

- The radio station wants to create a recorded promo for a contest using the celebrity’s voice. (Example: “This is Taylor Swift, and all this week, you can win tickets to my concert at the ACME Pavilion by listening to WKRP.”)

- A client wants the radio station to produce a commercial using the voice of a celebrity. (Example: “This is your homeboy Snoop Dogg, and whenever I’m at a cookout, fo’ shizzle I drizzle some Jack’s BBQ sauce on my burgers. Right Martha?” “That’s right, Snoop.”)

Yes, but is this legal?

So many questions! What if the technology is used to impersonate a celebrity’s voice but doesn’t explicitly identify the celebrity? Does it make a difference if the celebrity is no longer alive?

For answers, I turned to Belinda Scrimenti, a partner at Wilkinson, Barker, Knauer, LLP who specializes in trademark issues. Here’s what she told me:

These AI scenarios all potentially raise some trademark and copyright issues, but the biggest issues are those under what is called “the right of publicity.” Laws concerning the right of publicity vary from state to state, but generally they prohibit the use of a celebrity’s name, likeness, or other identifiable traits (including voice) without permission, particularly when used for commercial or promotional purposes. The estates of Elvis Presley and James Dean were forerunners in advancing state legislation to protect publicity rights, and the laws that these estates pushed to be adopted in Tennessee and Indiana have prompted many other states to adopt similar laws. Although these laws have existed for years for “real-world” use, they are likewise applicable to the virtual world and artificial intelligence.

Something that is so “over the top” that it obviously is humor and fake may be covered by a concept similar to the “fair use” doctrines in both copyright and trademark law. As with parodies under copyright law, a comedic impersonator making fun of the celebrity whose voice is being impersonated may be permissible; but be cautious: if the impersonation is used in a commercial manner, even a comedic use may be found to be a problem.

There can also be copyright violations (if a series of words or phrases were directly used and protected by the celebrity) or trademark/unfair competition, for false association with or endorsement by the celebrity. Many celebrities now have trademarks on or associated with their names.

Even celebrities who have died may still have rights in their names, voices, and other likeness – that all depends on state law. But note that many have registered trademarks to protect rights in the name, and anything that is run online may subject you to liability in a state where protections are afforded to celebrities after their deaths.

In short, there are lots of issues to look at in any use of a celebrity’s voice on the air – whether impersonated, edited or “created” by AI – so proceed with caution and talk to your lawyer!

Of course, there’s also another issue to consider: If you’re reading this, you’re probably a radio broadcaster, and perhaps there are a ton of recordings with your voice floating around the internet. So just as you can impersonate others, it’s easy for others to impersonate you. How would you feel if somebody impersonated you without permission? Do unto others…

At the end of the day, it’s not my job to tell you whether or not you should commit crime; it’s only my job to show you how. But just remember, as Spider-Man’s Uncle Ben once said, “With great power comes lots of nachos.”

No, really! He did! I’ve got the recording right here…

Special thanks to Ailsa Chang and Tom Griswold for agreeing not to sue me. Also, thank you to David Oxenford, who has written about how AI is affecting media and music companies in his Broadcast Law Blog.

Update 4/3/23: David Oxenford has expanded on this topic in the Broadcast Law Blog. You can read more here.
